I’ve always thought it would be cool to compose music in code. There are languages out there that do this, but I was more interested in programming MIDI directly than in a DSL for composing music. Enter mido.

mido is a MIDI library for Python that wraps lower-level backends (python-rtmidi by default) in a friendlier API. It can do a lot of things, but I was primarily interested in using code to control a synth in Bitwig Studio. I ended up writing a little step sequencer API. I used to love playing with Seq 303 back in the 90s, and that’s roughly what I had in mind for how to model MIDI notes as a song.

I started by defining a Note class.

    # note.py

    import mido


    class Note:
        # Note lengths expressed as seconds at 1 BPM; dividing by the
        # tempo gives seconds.
        # http://bradthemad.org/guitar/tempo_explanation.php
        LENGTHS = {
            'w': 240,
            'h': 120,
            'q': 60,
            'e': 30,
            's': 15,
            't': 7.5,
            'dq': 90,
            'de': 45,
            'ds': 22.5,
            'tq': 40,
            'te': 20,
            'ts': 10
        }

        # what is the equivalent of '1' numeric duration value
        DURATION_MULTIPLIER = LENGTHS['q']

        def __init__(self, value: int, duration, multiplier=1, velocity: int = 64):
            self.value = value
            self.duration = duration
            self.multiplier = multiplier
            self.velocity = velocity

        # Converts a note length value like dotted eighth to a value
        # in seconds based on the provided bpm tempo.
        # Length abbrs: w, h, q, e, s, t, dq, de, ds, tq, te, ts
        # (whole/half/quarter/eighth/sixteenth/32nd, dotted/triplet)
        def seconds(self, tempo: int):
            if isinstance(self.duration, str):
                if self.duration in Note.LENGTHS:
                    # named "length" so the built-in len() is not shadowed
                    length = Note.LENGTHS[self.duration]
                    return length * self.multiplier / tempo
                else:
                    return 0
            else:
                return self.duration * Note.DURATION_MULTIPLIER / tempo

        # Returns mido "note_on" message
        def get_on(self):
            print(f"On {self.value} {self.duration}")
            return mido.Message('note_on', note=self.value,
                                velocity=self.velocity)

        # Returns mido "note_off" message
        def get_off(self):
            print(f"Off {self.value} {self.duration}")
            return mido.Message('note_off', note=self.value)

And then a basic sequencer to play them.
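Before getting to the sequencer, here is the duration arithmetic on its own, as a small standalone sketch. The helper name `length_seconds` is made up for this example and is not part of note.py. The table values are seconds at 1 BPM, so dividing by the tempo gives real seconds.

```python
# Standalone sketch of the conversion that Note.seconds performs.
# length_seconds is a hypothetical helper, not part of note.py.
LENGTHS = {'w': 240, 'h': 120, 'q': 60, 'e': 30, 's': 15, 't': 7.5,
           'dq': 90, 'de': 45, 'ds': 22.5, 'tq': 40, 'te': 20, 'ts': 10}


def length_seconds(duration, tempo, multiplier=1):
    """Seconds for a length abbreviation, or for a number of quarter notes.

    Unlike note.py, an unknown abbreviation raises KeyError instead of
    returning 0.
    """
    if isinstance(duration, str):
        return LENGTHS[duration] * multiplier / tempo
    return duration * LENGTHS['q'] / tempo


for name in ('q', 'de', 'tq'):
    print(name, length_seconds(name, tempo=100))
print(4, length_seconds(4, tempo=100))
```

At 100 BPM this works out to 0.6 seconds for a quarter note, 0.45 for a dotted eighth, 0.4 for a triplet quarter, and 2.4 for a numeric duration of 4.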

    # sequencer.py

    import concurrent.futures
    from concurrent.futures import ThreadPoolExecutor
    import time

    import mido

    from note import Note


    class Step:
        def __init__(self, value: int, duration: int = 1, start_tick: int = 0):
            self.enabled = True
            # offset into the step, in ticks (see Sequencer.tick_count)
            self.start_tick = start_tick
            # shorten the note by its start delay
            self.adjust_duration_for_start_tick = True
            self.note = Note(value, duration)

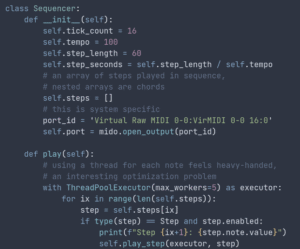

    class Sequencer:
        def __init__(self):
            self.tick_count = 16
            self.tempo = 100
            self.step_length = 60
            self.step_seconds = self.step_length / self.tempo
            # an array of steps played in sequence,
            # nested arrays are chords
            self.steps = []
            # this is system specific
            port_id = 'Virtual Raw MIDI 0-0:VirMIDI 0-0 16:0'
            self.port = mido.open_output(port_id)

        def play(self):
            # using a thread for each note feels heavy-handed,
            # an interesting optimization problem
            with ThreadPoolExecutor(max_workers=5) as executor:
                for ix, step in enumerate(self.steps):
                    if isinstance(step, Step) and step.enabled:
                        print(f"Step {ix+1}: {step.note.value}")
                        self.play_step(executor, step)
                    elif isinstance(step, list):
                        print(f"Step {ix+1}: {self.chord_str(step)}")
                        for substep in step:
                            self.play_step(executor, substep)
                    # wait one step before starting the next
                    time.sleep(self.step_seconds)

        def play_step(self, executor, step):
            future = executor.submit(note_player, self, step)
            future.add_done_callback(note_finished)

        def chord_str(self, steps):
            return [step.note.value for step in steps]


    # Runs in a worker thread: waits for the step's start tick,
    # sends note_on, holds the note, then sends note_off.
    def note_player(seq: Sequencer, step: Step):
        applied_length = seq.step_length / seq.tempo
        delay_seconds = step.start_tick * applied_length / seq.tick_count
        note_seconds = step.note.seconds(seq.tempo)
        if delay_seconds > 0:
            time.sleep(delay_seconds)
        if step.adjust_duration_for_start_tick:
            # clamp at zero: time.sleep raises ValueError on negative values
            note_seconds = max(0, note_seconds - delay_seconds)
        seq.port.send(step.note.get_on())
        time.sleep(note_seconds)
        seq.port.send(step.note.get_off())
        return step


    def note_finished(future: concurrent.futures.Future):
        print(f"Finished: {future.result().note.value}")
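On the thread-per-note question: one alternative, sketched here as an assumption rather than anything the sequencer above does, is to work out every note-on and note-off time up front and sort them into a single event list that one loop could play. The function `build_events` and its tuple-based step format are made up for this sketch; the delay arithmetic mirrors `note_player`.

```python
# Hypothetical sketch: flatten steps into one sorted list of timed events.
# A step is a (note, beats, start_tick) tuple; a chord is a list of them.
STEP_LENGTH = 60   # same value as Sequencer.step_length
TICK_COUNT = 16    # same value as Sequencer.tick_count


def build_events(steps, tempo):
    """Return [(seconds_from_start, 'on' or 'off', note), ...] sorted by time."""
    step_seconds = STEP_LENGTH / tempo
    events = []
    for index, step in enumerate(steps):
        step_start = index * step_seconds
        chord = step if isinstance(step, list) else [step]
        for note, beats, start_tick in chord:
            delay = start_tick * step_seconds / TICK_COUNT
            # Mirrors adjust_duration_for_start_tick: the note is shortened
            # by its start delay, never below zero.
            length = max(0.0, beats * step_seconds - delay)
            events.append((step_start + delay, 'on', note))
            events.append((step_start + delay + length, 'off', note))
    # Sort by time; when times tie, put 'off' first so a note is released
    # before the next one starts.
    events.sort(key=lambda event: (event[0], event[1] != 'off'))
    return events


for when, kind, note in build_events([(60, 1, 0), (64, 1, 0)], tempo=100):
    print(f"{when:.3f} {kind} {note}")
```

A single loop could then walk this list and send each message at its time, which removes the need for a worker per note. Whether that is actually better for timing is something I have not measured.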

And finally, a little celebratory tune.

    # tada.py

    from sequencer import Sequencer, Step

    # four single notes, one step each
    step1 = Step(60, 1)
    step2 = Step(64, 1)
    step3 = Step(67, 1)
    step4 = Step(72, 1)

    # the same notes as a chord held for four steps,
    # with staggered start ticks
    step1a = Step(60, 4, 1)
    step2a = Step(64, 4, 2)
    step3a = Step(67, 4, 3)
    step4a = Step(72, 4, 4)

    steps = [step1, step2, step3, step4]
    steps.append([step1a, step2a, step3a, step4a])

    seq = Sequencer()
    seq.steps = steps
    seq.play()

Here’s the output of python tada.py.

    Step 1: 60
    On 60 1
    Off 60 1
    Finished: 60
    Step 2: 64
    On 64 1
    Step 3: 67
    Off 64 1
    Finished: 64
    On 67 1
    Step 4: 72
    On 72 1
    Off 67 1
    Finished: 67
    Step 5: [60, 64, 67, 72]
    Off 72 1
    Finished: 72
    On 60 4
    On 64 4
    On 67 4
    On 72 4
    Off 60 4
    Finished: 60
    Off 64 4
    Finished: 64
    Off 67 4
    Finished: 67
    Off 72 4
    Finished: 72

And here’s what it sounds like running through the Bitwig Organ.

If you want to run this locally, you’ll need a MIDI device to turn the MIDI notes into sound. For me, that is Bitwig Studio. It might just work using the built-in software synth on Windows, but I haven’t tried it.
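The port name hard-coded in the sequencer will almost certainly be different on your machine. Here is a minimal sketch for finding one, assuming mido and python-rtmidi are installed. `mido.get_output_names()` and `mido.open_output()` are real mido calls; `choose_port` and `open_output_by_hint` are helper names made up for this example.

```python
def choose_port(names, hint):
    """Return the first port name containing hint (case-insensitive), else None."""
    for name in names:
        if hint.lower() in name.lower():
            return name
    return None


def open_output_by_hint(hint):
    """Open the first MIDI output whose name contains hint."""
    # Imported here so choose_port can be tried without mido installed.
    import mido

    names = mido.get_output_names()
    name = choose_port(names, hint)
    if name is None:
        raise RuntimeError(f"No MIDI output matching {hint!r}; available: {names}")
    return mido.open_output(name)


# Example (needs a real MIDI output on the system):
# port = open_output_by_hint('VirMIDI')
```

Printing `mido.get_output_names()` once is also a quick way to see what your system calls its ports.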

You’ll also need to install some Python packages. Here’s the requirements.txt.

    mido
    python-rtmidi
    importlib_metadata

And here are shell commands to create a virtual environment, install these requirements, and run the program (I’m using bash).

    mkdir midosequencer
    cd midosequencer
    python -m venv .venv
    source .venv/bin/activate
    pip install -r requirements.txt
    python tada.py

This was a fun little project. It was interesting to realize how conceptual music time has to be converted into literal time, down to when each note should start and stop, calculated from tempo and note length, which is something humans do without thinking about it. It also makes me curious how real sequencers like Bitwig handle concurrency and timing.
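On that last question, I don’t know what Bitwig does internally. One general technique for keeping timing from drifting is to schedule against absolute deadlines on a monotonic clock instead of sleeping for relative amounts. Here is a minimal sketch of that idea. The function `play_events` and its parameters are hypothetical, and `send` stands in for something like a mido port’s `send` method.

```python
import time


def play_events(events, send, clock=time.monotonic, sleep=time.sleep):
    """Call send(payload) for each (seconds_from_start, payload), in time order.

    Every wait is measured against the start time, so a late wake-up on one
    event does not push back all the events after it.
    """
    start = clock()
    for offset, payload in sorted(events, key=lambda event: event[0]):
        remaining = start + offset - clock()
        if remaining > 0:
            sleep(remaining)
        send(payload)


# Example: print two events 0.2 seconds apart.
play_events([(0.0, 'on 60'), (0.2, 'off 60')], send=print)
```

The clock and sleep functions are parameters only so the loop can be exercised with fakes; in normal use the defaults are fine.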